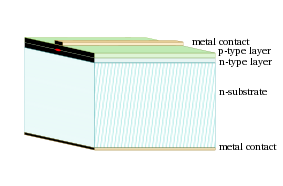

A laser diode is formed by doping a very thin layer on the surface of a crystal wafer. The crystal is doped to produce an n-type region and a p-type region, one above the other, resulting in a p-n junction, or diode. The structure, a lasing medium between two conductive partial mirrors, is simple:

...

When an electron and a hole are present in the same region, they may recombine, or "annihilate", resulting in spontaneous emission: the electron may re-occupy the energy state of the hole, emitting a photon with energy equal to the difference between the electron and hole states involved. Below the lasing threshold, spontaneous emission gives the laser diode properties similar to those of an LED.
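The link between that energy difference and the colour of the emitted light is E = hc/λ, which works out to roughly λ[nm] ≈ 1239.84 / E[eV]. Here is a minimal sketch of that arithmetic, assuming the electron-hole energy difference is given in electronvolts and treating the result as a vacuum wavelength; the function names are illustrative, not from any library.

```python
# CODATA 2018 exact SI constants
PLANCK_H = 6.62607015e-34            # Planck constant, J*s
SPEED_OF_LIGHT = 299_792_458.0       # speed of light in vacuum, m/s
JOULES_PER_EV = 1.602176634e-19      # 1 eV expressed in joules


def photon_wavelength_nm(energy_ev: float) -> float:
    """Vacuum wavelength (nm) of a photon carrying energy_ev electronvolts.

    Uses lambda = h*c / E, where E is the electron-hole energy difference.
    """
    if energy_ev <= 0:
        raise ValueError("photon energy must be positive")
    energy_j = energy_ev * JOULES_PER_EV
    return PLANCK_H * SPEED_OF_LIGHT / energy_j * 1e9  # metres -> nanometres


def photon_energy_ev(wavelength_nm: float) -> float:
    """Inverse relation: photon energy (eV) for a vacuum wavelength in nm."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m / JOULES_PER_EV


if __name__ == "__main__":
    print(f"{photon_energy_ev(780.0):.2f} eV")  # prints "1.59 eV"
```

For example, the 780 nm infrared lasers commonly used in CD drives correspond to a transition energy of about 1.59 eV.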

The physical chemistry is more complicated, and it took a lot of research to learn how to make laser diodes that work at room temperature, are cheap, and last a long time.

This is a visible-light micrograph of a laser diode taken from a CD-ROM drive. The P and N layers are visible, distinguished by their different colours. Scattered glass fragments from a broken collimating lens are also visible.

The first laser diode to achieve continuous-wave operation was a double heterostructure demonstrated in 1970, essentially simultaneously, by Zhores Alferov and collaborators (including Dmitri Z. Garbuzov) in the Soviet Union and by Morton Panish and Izuo Hayashi in the United States. It is, however, widely accepted that Alferov's team reached the milestone first. For their accomplishment and that of their co-workers, Alferov and Herbert Kroemer, who had proposed the double-heterostructure laser in 1963, shared half of the 2000 Nobel Prize in Physics.

David Byrne, lead singer of Talking Heads, in 1987:

I don't think computers will have any important effect on the arts in 2007. When it comes to the arts they're just big or small adding machines. And if they can't "think," that's all they'll ever be. They may help creative people with their bookkeeping, but they won't help in the creative process.

Simultaneous localization and mapping (SLAM) on an iPad 2:

The Singularity is Far: A Neuroscientist's View, including many interesting thoughts in the comments.

Art && Code: 3D

Kinect-Hacking Conference

Art && Code: 3D is a festival-conference about the artistic, technical, tactical and cultural potentials of 3D scanning and sensing devices — especially (but not exclusively) including the revolutionary Microsoft Kinect sensor. This highly interdisciplinary event will bring together, for the first time, tinkerers and hackers, computational artists and designers, industrial game developers, and leading researchers from the fields of computer vision, HCI and robotics. Half-maker’s festival, half-academic symposium, Art && Code: 3D will take place October 21-23 at Carnegie Mellon University in Pittsburgh, and will feature:

- Hands-on workshops in programming interactive software with the Kinect, using popular arts-engineering toolkits such as Processing, openFrameworks, Cinder, Max/MSP/Jitter, Pure Data, and Microsoft’s own Kinect for Windows SDK in Silverlight.

- Presentations by leading artists, designers, and researchers about their projects with depth cameras.

- An interactive exhibition and live performance evening featuring artworks, robotics, games and other experiences using the Kinect.

Omek Raises $7 Million From Intel, Aims To Challenge Microsoft’s Kinect

Omek Interactive, a provider of tools that enables companies to incorporate gesture recognition and full body tracking into their applications and devices, has secured $7 million in financing in a round led by Intel Capital, TechCrunch has learned. The Series C round brings the company’s total funding raised to nearly $14 million.

Omek’s Beckon technology converts the raw depth map data from most major 3D cameras into an awareness of people and their movements or positions in front of the camera, enabling them to be converted into commands that control hardware or software.
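Beckon's internals aren't described here, so the following is only a toy illustration of the general idea of turning a depth map into a position and then into a command. It keeps pixels within an assumed distance band as "the person", takes their centroid, and maps it to a coarse left/center/right command. The depth-map format (millimetres per pixel, 0 meaning no reading), the distance band, and all function names are hypothetical; this is not Omek's algorithm.

```python
from typing import List, Optional, Tuple

# Hypothetical input format: depth in millimetres per pixel, 0 = no reading.
DepthMap = List[List[int]]
Mask = List[List[bool]]


def foreground_mask(depth: DepthMap, near_mm: int, far_mm: int) -> Mask:
    """Mark pixels whose depth falls inside [near_mm, far_mm] as foreground."""
    return [[near_mm <= d <= far_mm for d in row] for row in depth]


def centroid(mask: Mask) -> Optional[Tuple[float, float]]:
    """Mean (row, column) of foreground pixels, or None if there are none."""
    count = 0
    sum_row = 0.0
    sum_col = 0.0
    for r, row in enumerate(mask):
        for c, is_fg in enumerate(row):
            if is_fg:
                count += 1
                sum_row += r
                sum_col += c
    if count == 0:
        return None
    return (sum_row / count, sum_col / count)


def horizontal_command(mask: Mask) -> Optional[str]:
    """Map the foreground centroid to a coarse 'left'/'center'/'right' command."""
    center = centroid(mask)
    if center is None:
        return None  # nobody inside the distance band
    third = len(mask[0]) / 3
    col = center[1]
    if col < third:
        return "left"
    if col >= 2 * third:
        return "right"
    return "center"


if __name__ == "__main__":
    # A person ~1.5 m away on the left, a wall ~4 m away, one missing reading (0).
    depth = [
        [1500, 1500, 4000, 4000, 4000, 4000],
        [1500, 1500, 4000, 4000, 4000, 4000],
        [1500, 1500, 4000, 4000, 0, 4000],
        [1500, 1500, 4000, 4000, 4000, 4000],
    ]
    mask = foreground_mask(depth, near_mm=800, far_mm=2500)
    print(centroid(mask), horizontal_command(mask))  # prints "(1.5, 0.5) left"
```

Real body tracking goes much further (skeleton fitting, tracking over time), but the threshold-then-summarize structure shows why depth data helps: in this toy, separating a person from the background is a distance test rather than a colour-segmentation problem.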

Solving laundry at UC Berkeley (Willow Garage)

Nearly a million people have watched UC Berkeley's PR2 folding towels and sorting socks on YouTube, and it's easy to understand why: having a robot that can do your laundry is a fantasy that's been around since The Jetsons, and while we're not there yet, it's not nearly as far off a future as it was before the PR2 Beta Program. Since those demos, one of the research groups at Berkeley has been working on ways of making the laundry cycle faster, more efficient, and more complete, and for starters, they've taught their PR2 to reliably handle your pants.

The goal of Pieter Abbeel’s group is to teach a robot to solve the laundry problem. That is, to develop a system to enable a robot to go into a home it's never seen before, load and unload a washer and dryer, and then fold the clean clothes and put them away just like you would. The first aspect of this problem that the group tackled was folding, which is one of those things that seems trivial to us but is very difficult for a robot to figure out since clothes are floppy, unpredictable, and often decorated with tasteless and complicated colors and patterns.

In Search of a Robot More Like Us

Although robots have made great strides in manufacturing, where tasks are repetitive, they are still no match for humans, who can grasp things and move about effortlessly in the physical world. Designing a robot to mimic the basic capabilities of motion and perception would be revolutionary, researchers say, with applications stretching from care for the elderly to returning overseas manufacturing operations to the United States (albeit with fewer workers).

Yet the challenges remain immense, far higher than artificial intelligence hurdles like speaking and hearing.
